Disclaimer I: I don't work at AWS :) but I seriously think that building your own cloud (as some people call it) or setting up a private cloud doesn't really address anything, apart from giving you something to post in the comments of an article about an outage.

Disclaimer II: Though not everyone admits it, some people who were not affected during outages have simply been lucky: their Instances and EBS Volumes weren't in the affected AZ. People spend time building sophisticated architectures, but it is only during such outages that an architecture is put to a real test. Just as AWS themselves uncover new failure areas, your architecture will also reveal new failure points during outages, and you need to work on improving them.

We just had another outage in AWS, and this time too a good number of popular websites were affected: Reddit, Foursquare and Heroku are a few from the list. AWS has published a detailed analysis of what caused the outage. The previous outage, on June 29th, was even more widespread and took down Netflix and others. For that one too, AWS provided a detailed analysis of what went wrong and of the actions they are taking to prevent such incidents in future.

The aftermath of and reactions to such outages can be classified into two kinds: those from people who understand Cloud and those from people who don't. No one likes outages. But people who understand Cloud clearly see outages as an opportunity to build better systems and better architectures. And then there are people who start blaming the Cloud, saying things like "this is the reality behind Cloud", or even that it is better to own than to rent. Public Cloud versus Private Cloud is a huge debate, and if you have been in the Cloud market you will hear about even more types of Cloud, like Hybrid Cloud.

Why Cloud?

Be it public cloud, private cloud or hybrid cloud, be it OpenStack or CloudStack, one should clearly know why they need a Cloud strategy. Are you getting into Cloud for:

- Scalability

- High Availability

- Cost

- Getting away from infrastructure management

- Everyone is getting into Cloud

- My resume needs to show I know Cloud

Be honest in your answer to this evaluation, because the benefits vary depending on where you fit in the above classification. Of course, everyone is concerned about cost and would like to keep costs low. But there are other invisible costs that don't normally stand out. The cost of a slow website or of a lost customer is not as directly visible as infrastructure cost. And designing scalable systems within a specific budget is an even bigger challenge.

Hardware fails

It has to be understood that hardware fails all the time. The Cloud Service Provider doesn't set up some magic data center where hardware doesn't fail. If someone advises you to move to a private cloud because of such outages in the public cloud, be aware that the same hardware failures will happen in a private cloud setup as well. In contrast, because of the scale at which a public cloud service provider operates, there is massive automation in place. There are systems that detect failures well ahead of time and act on their own. Disks that reach the end of their lifetime get replaced automatically, and those disks go through a purge cycle to be destroyed completely as per the service provider's policy. Servers get replaced, security systems get updated and networks get upgraded. All of this happens continuously and transparently, probably as an everyday routine in all the data centers. All of these things need to happen in your own data centers too if you are going to set up a private cloud.

Efficiency

Amazon.com is successful because they mastered retail and operate it at cut-throat efficiency. They have successfully replicated the same model in AWS. At any given point in time, AWS tries to operate their data centers at maximum efficiency; that's where they make the most of the buck. By continuously operating at that efficiency, and because of economies of scale, they are also able to pass the benefits on to end customers as price reductions (even without any significant competition forcing them to). Whether a private cloud setup, or another service provider, would operate at that efficiency and keep automating is something one has to evaluate.

With that as a background, here are some thoughts on keeping your website highly available.

Availability Zones

I can read your mind: "Yeah, I know. You're gonna tell me to use multiple Availability Zones, right?" Yes, almost the first thing you will notice in any article about overcoming outages is the advice to use multiple Availability Zones (AZs). So is there something new that I am going to talk about? Yes.

- AZs are isolated data centers (some AZs might be backed by more than one data center). They are isolated in the sense that each has its own independent power, diesel generators, cooling and outbound internet connectivity. They are not, however, separated across coasts or cities; they are nearby data centers with a low-latency network between them

- AZs have low latency between them, but there still is latency, and depending on the number of concurrent requests your application is expected to handle, it might be significant for you. So if you have application servers distributed across AZ-1 and AZ-2 but the database only in AZ-1, the application servers in AZ-2 will experience extra latency when talking to the DB
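To see why that extra hop can matter, here is a back-of-the-envelope sketch in Python. It assumes, hypothetically, that each database query from an app server in AZ-2 pays one extra cross-AZ round trip and that a request's queries run one after another; the 1 ms round-trip figure is an illustrative placeholder, not a measured AWS number.

```python
def added_latency_ms(queries_per_request: int, cross_az_rtt_ms: float) -> float:
    """Extra latency a request pays when its database sits in another AZ.

    Assumes the request issues its queries one after another, so each
    query adds one cross-AZ round trip on top of the same-AZ cost.
    """
    if queries_per_request < 0 or cross_az_rtt_ms < 0:
        raise ValueError("inputs must be non-negative")
    return queries_per_request * cross_az_rtt_ms


# Hypothetical round-trip time, for illustration only.
CROSS_AZ_RTT_MS = 1.0

for queries in (1, 10, 50):
    extra = added_latency_ms(queries, CROSS_AZ_RTT_MS)
    print(f"{queries:>2} sequential queries -> +{extra:.1f} ms per request")
```

The point is the multiplication: a page that issues many sequential queries pays the cross-AZ penalty many times over.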

So make use of multiple AZs. If you are planning for High Availability at the web layer, you will need a minimum of two EC2 Instances; launch them in two different AZs. Each region in AWS has at least two AZs, and any new region that AWS launches will have at least two AZs. If you look at the latest outage, it was specific to a particular AZ, so infrastructure spanning multiple AZs wasn't completely down. But then why did Reddit go down? I will talk about that a little later.
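Why spread the two Instances over two AZs rather than keep both in one? Treating each AZ, purely for illustration, as independently available 99.9% of the time, a web tier that stays up as long as any one of its AZs is up gains roughly three nines per extra AZ under that model. A minimal Python sketch of the arithmetic; the independence assumption is exactly what outages like the ones discussed here can violate, so read the numbers as an upper bound rather than a promise.

```python
def parallel_availability(per_az: float, azs: int) -> float:
    """Availability of a tier that stays up while at least one of its AZs is up.

    Assumes AZ failures are independent. Real outages (shared software bugs,
    overloaded control planes) can break that assumption.
    """
    if not 0.0 <= per_az <= 1.0 or azs < 1:
        raise ValueError("per_az must be in [0, 1] and azs must be >= 1")
    # The tier is down only if every AZ is down at the same time.
    return 1.0 - (1.0 - per_az) ** azs


MINUTES_PER_YEAR = 365 * 24 * 60

for n in (1, 2, 3):
    a = parallel_availability(0.999, n)  # hypothetical 99.9% per AZ
    downtime = (1.0 - a) * MINUTES_PER_YEAR
    print(f"{n} AZ(s): availability {a:.7f}, ~{downtime:.2f} min/year of downtime")
```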

Elastic Load Balancing

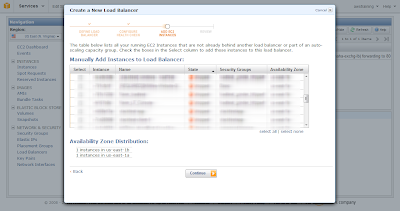

Elastic Load Balancing (ELB) is the load balancing service from AWS. When you look at ELB, there are two things that you should pay attention to. One - ELB servers. The EC2 Instances that AWS will be using to run the ELB service. Two - the servers behind the ELB which are your web/app servers.

- ELB, being a service from AWS has HA, scalability and fault tolerance built into it. The Instances that AWS will be provisioning to support the ELB service will span across multiple AZ's and will automatically scale on its own. All of this are abstracted to us and it is an obvious guess that AWS will utilize multiple AZ's by themselves

|

| Configuring ELB's to distribute traffic across multiple AZ's |

- For the web/app Instances that sit behind the ELB, you can specify which AZs the ELB should support when you create it. For example, when you create an ELB in the US-East-1 region, you can specify "us-east-1a" and "us-east-1b", and the ELB will distribute incoming traffic between those AZs. Why is this important? Because specifying multiple AZs for an ELB results in two things:

  - The Instances that the ELB creates for load balancing will be created in all the AZs you specify. This means there is AZ-level HA for the load balancing Instances themselves

  - If there is an AZ-level failure, the ELB service will route traffic to the load balancing Instances in the other AZ, thereby continuing service operation

- So why was the ELB service interrupted during the outage? It appears that there was a software bug in the traffic diversion software, which resulted in traffic still being routed to the load balancing Instances in the affected AZ. It looks like AWS will be fixing that bug soon
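The failover behaviour described above can be illustrated with a toy router in Python. This is a hypothetical model, not how ELB is implemented: each configured AZ carries a health flag, and traffic is spread round-robin over the healthy AZs only. In this model, the bug described in the last point corresponds to the affected AZ never being taken out of the rotation.

```python
from itertools import cycle
from typing import Dict, List


def route_requests(n: int, azs: List[str], healthy: Dict[str, bool]) -> List[str]:
    """Assign n requests round-robin across the configured AZs that are healthy.

    A simplified model of AZ-level failover: an AZ marked unhealthy (or not
    listed in `healthy`) receives no traffic at all.
    """
    targets = [az for az in azs if healthy.get(az, False)]
    if not targets:
        raise RuntimeError("no healthy AZ available")
    rotation = cycle(targets)
    return [next(rotation) for _ in range(n)]


azs = ["us-east-1a", "us-east-1b"]

# Normal operation: traffic alternates between the two AZs.
normal = route_requests(4, azs, {"us-east-1a": True, "us-east-1b": True})

# us-east-1a is down: everything is diverted to us-east-1b.
failover = route_requests(4, azs, {"us-east-1a": False, "us-east-1b": True})

print(normal)
print(failover)
```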

AutoScaling

AutoScaling automatically scales your Instances up or down according to pre-configured triggers (based on CPU, network, etc.). AutoScaling works in conjunction with ELB and also receives signals from it. AutoScaling can be configured with multiple AZs as follows:

```shell
as-create-auto-scaling-group AutoScalingGroupName --availability-zones value[,value...] --launch-configuration value --max-size value --min-size value [--cooldown value] [--load-balancers value[,value...]] [General Options]
```
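The synopsis above is for the legacy Auto Scaling command line tools. As a hedged sketch of the same request through the AWS SDK for Python (boto3), the helper below builds keyword arguments for `create_auto_scaling_group`, mapping each CLI flag to its API parameter. The client is passed in so the request can be inspected without calling AWS.

```python
from typing import Any, Dict, List, Optional


def build_asg_request(
    name: str,
    launch_configuration: str,
    azs: List[str],
    min_size: int,
    max_size: int,
    load_balancers: Optional[List[str]] = None,
    cooldown_seconds: Optional[int] = None,
) -> Dict[str, Any]:
    """Keyword arguments for the Auto Scaling CreateAutoScalingGroup call.

    --availability-zones   -> AvailabilityZones
    --launch-configuration -> LaunchConfigurationName
    --min-size/--max-size  -> MinSize/MaxSize
    --load-balancers       -> LoadBalancerNames (Classic ELB names)
    --cooldown             -> DefaultCooldown
    """
    if not azs:
        raise ValueError("specify at least one Availability Zone")
    if min_size > max_size:
        raise ValueError("min_size must not exceed max_size")
    request: Dict[str, Any] = {
        "AutoScalingGroupName": name,
        "LaunchConfigurationName": launch_configuration,
        "AvailabilityZones": list(azs),
        "MinSize": min_size,
        "MaxSize": max_size,
    }
    if load_balancers:
        request["LoadBalancerNames"] = list(load_balancers)
    if cooldown_seconds is not None:
        request["DefaultCooldown"] = cooldown_seconds
    return request


def create_asg(client: Any, **kwargs: Any) -> Any:
    """Send the request using an Auto Scaling client, e.g. boto3.client("autoscaling")."""
    return client.create_auto_scaling_group(**build_asg_request(**kwargs))
```

With credentials configured, a call might look like `create_asg(boto3.client("autoscaling"), name="web-asg", launch_configuration="web-lc", azs=["us-east-1a", "us-east-1b", "us-east-1c"], min_size=3, max_size=6, load_balancers=["web-elb"])`, where all names are hypothetical placeholders.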

Once configured, when AutoScaling launches or terminates Instances, it distributes them across the configured AZs. So if the scale-up action launches two Instances and AutoScaling has been configured with "us-east-1a" and "us-east-1b", AutoScaling will launch one Instance in each AZ.
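The even spread described above can be modelled as "launch each new Instance into the AZ that currently has the fewest Instances". The Python sketch below is a hypothetical illustration of that rule, not the exact balancing logic AutoScaling applies:

```python
from typing import Dict, List


def place_instances(count: int, azs: List[str], current: Dict[str, int]) -> List[str]:
    """Choose an AZ for each new Instance by always filling the emptiest AZ.

    A simplified model of AZ balancing; ties go to the AZ listed first.
    """
    if not azs:
        raise ValueError("at least one AZ is required")
    counts = {az: current.get(az, 0) for az in azs}
    placements = []
    for _ in range(count):
        # min() returns the first AZ with the lowest count, so ties are deterministic.
        target = min(azs, key=lambda az: counts[az])
        counts[target] += 1
        placements.append(target)
    return placements


# Scale-up of 2 into an empty two-AZ group: one Instance per AZ.
print(place_instances(2, ["us-east-1a", "us-east-1b"], {}))

# Three AZs where us-east-1a already runs 2 Instances: the others catch up first.
print(place_instances(3, ["us-east-1a", "us-east-1b", "us-east-1c"], {"us-east-1a": 2}))
```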

Go HA with 3 not 2

We know that we have to use multiple AZs, and regions like US-East have 5 AZs. So how many do you use in ELB and AutoScaling? It is better to use at least three AZs. If you are using only two AZs and one of them goes down, the remaining AZ receives twice its normal traffic, since ELB redirects all the traffic to it. Say your AutoScaling is configured to scale up by one Instance at a time; you have now also lost an entire AZ's worth of Instances. So by the time AutoScaling reacts, you are probably flooded with traffic. And,

- You aren't alone: you are not the only one affected. Hundreds of other customers with thousands of Instances are affected too, and they are all going to spin up Instances in the alternate AZs. AWS might then run out of capacity in those AZs, because the region is already operating at reduced capacity (about 20% less in the case of US-East, where one of five AZs is down)

- API error rates: normally, with any such outage, the APIs start throwing errors. You provision infrastructure through the APIs, or through the AWS Management Console, which is a UI wrapper around the APIs. During an outage, AWS definitely doesn't want the other AZs to be flooded with requests, which could cause a spiral effect and push the other AZs to critical infrastructure thresholds as well. The AWS APIs themselves run in multi-AZ mode and are probably affected by the unavailability of an AZ. In such cases, I am sure AWS employs a lot of throttling at the API level, to prevent customer requests from causing a spiral effect in the other AZs

- Other AWS services: there are other services like ELB, Route53, SQS, SES, etc. which have HA built into them. For example, to keep its own load balancing service from going down during an outage, the ELB service might provision its own ELB Instances in the alternate AZs
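From the customer side, the usual way to live with API throttling during an outage is to retry with exponential backoff and jitter instead of hammering the API, which would only add to the spiral effect described above. A generic Python sketch follows; `ThrottledError` and `flaky_describe` are hypothetical stand-ins (with a real SDK you would map its throttling error onto this check), and the delay values are illustrative.

```python
import random
import time
from typing import Callable, List, Optional, TypeVar

T = TypeVar("T")


class ThrottledError(Exception):
    """Hypothetical stand-in for an API's throttling / rate-exceeded error."""


def call_with_backoff(
    call: Callable[[], T],
    max_attempts: int = 5,
    base_delay: float = 0.5,
    max_delay: float = 20.0,
    sleep: Callable[[float], None] = time.sleep,
    rng: Optional[random.Random] = None,
) -> T:
    """Retry `call` on ThrottledError with exponential backoff and full jitter."""
    if max_attempts < 1:
        raise ValueError("max_attempts must be at least 1")
    rng = rng or random.Random()
    for attempt in range(max_attempts):
        try:
            return call()
        except ThrottledError:
            if attempt == max_attempts - 1:
                raise  # out of attempts: surface the throttling error
            # Cap grows 0.5s, 1s, 2s, ...; a random delay below it spreads clients out.
            cap = min(max_delay, base_delay * 2 ** attempt)
            sleep(rng.uniform(0, cap))
    raise RuntimeError("unreachable")


# Example: a hypothetical API call that is throttled twice before succeeding.
attempts: List[int] = []


def flaky_describe() -> str:
    attempts.append(1)
    if len(attempts) < 3:
        raise ThrottledError("Rate exceeded")
    return "ok"


delays: List[float] = []
result = call_with_backoff(flaky_describe, sleep=delays.append, rng=random.Random(42))
print(result, [round(d, 3) for d in delays])
```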

So in effect, though a multi-AZ setup is the recommended setup in AWS, it is better to use more than two AZs (if the region has them). In the case of US-East, you could, say, use three AZs in your AutoScaling configuration and ELB settings. The probability of two AZs going down at the same time is lower, and traffic is better distributed even if one AZ is down. Of course, it can be argued that we are just postponing an eventuality. Yes, but during an outage the primary goal of anyone is to sustain operations (even at reduced capacity) and return to normal as early as possible.
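The arithmetic behind "3 not 2" is simple: if traffic is spread evenly over N AZs and one goes down, each surviving AZ has to absorb N/(N-1) times its normal load. A minimal Python sketch, assuming even distribution and ignoring AutoScaling lag:

```python
def surviving_load_factor(total_azs: int, failed_azs: int = 1) -> float:
    """How many times its normal traffic each surviving AZ must absorb.

    Assumes traffic was spread evenly and is re-spread evenly over survivors.
    """
    if total_azs < 1 or not 0 <= failed_azs < total_azs:
        raise ValueError("need at least one surviving AZ")
    return total_azs / (total_azs - failed_azs)


def max_safe_utilization(total_azs: int) -> float:
    """Highest steady-state utilization per AZ that still absorbs one AZ failure
    without waiting for AutoScaling."""
    return 1.0 / surviving_load_factor(total_azs)


for n in (2, 3, 4):
    print(f"{n} AZs, one down: x{surviving_load_factor(n):.2f} load per survivor, "
          f"run each AZ at <= {max_safe_utilization(n):.0%}")
```

With two AZs, surviving the loss of one without launching anything means running each AZ at no more than half capacity; with three AZs the ceiling rises to about two-thirds.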

Understand AWS Services that are multi-AZ

The following services from AWS are inherently multi-AZ and highly available. During an outage, these services will still be available, since they will have failed over internally to another AZ.

- Route53 - DNS

- Elastic Load Balancing - Load Balancer

- CloudFront - Content Distribution Network

- Amazon S3 - Storage Service

- DynamoDB - NoSQL Database Service

- SQS - Simple Queue Service

- SES - Simple Email Service

But even Multi-AZ RDS Instances were affected

Yes, and that again seems to be because of bugs in the automatic failover software. RDS relies on EBS for database storage, and this outage started with problems in EBS Volumes (which were the result of improper internal DNS propagation). If the EBS Volume of a primary RDS Instance gets stuck, that should result in an immediate automatic failover to the standby. The failover didn't happen immediately because the software didn't anticipate the type of EBS I/O issue that occurred during this outage. For some of the RDS Instances, it also looks like the primary lost connectivity to the standby while the primary was still accepting transactions. This should have immediately triggered a failover, because the standby was no longer receiving updates, which can result in data inconsistency. But again, a software bug seems to have prevented such a failover. This has to be addressed immediately, because RDS is a service where AWS has developed synchronous replication to the standby, which isn't available in native MySQL, and that definitely needs to work perfectly all the time.
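The two conditions described above can be written down as a small decision function. This is a hypothetical sketch for reasoning about the failure modes, not RDS's actual failover logic; the field names and the 30-second stall threshold are assumptions.

```python
from dataclasses import dataclass


@dataclass
class PrimaryStatus:
    """Hypothetical health signals for a primary database with a standby."""

    io_stalled_seconds: float  # how long storage I/O on the primary has been stuck
    standby_reachable: bool    # can the primary still replicate to the standby?
    accepting_writes: bool     # is the primary still committing transactions?


def should_fail_over(status: PrimaryStatus, io_stall_threshold: float = 30.0) -> bool:
    """Return True if the standby should be promoted, per the two cases above."""
    # Case 1: the primary's storage has been stuck for too long.
    if status.io_stalled_seconds >= io_stall_threshold:
        return True
    # Case 2: the primary keeps committing writes the standby never receives,
    # so the two copies are drifting apart.
    if status.accepting_writes and not status.standby_reachable:
        return True
    return False


healthy = PrimaryStatus(io_stalled_seconds=0.0, standby_reachable=True, accepting_writes=True)
stuck_io = PrimaryStatus(io_stalled_seconds=120.0, standby_reachable=True, accepting_writes=False)
split = PrimaryStatus(io_stalled_seconds=0.0, standby_reachable=False, accepting_writes=True)

print(should_fail_over(healthy), should_fail_over(stuck_io), should_fail_over(split))
```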

Perceived Availability

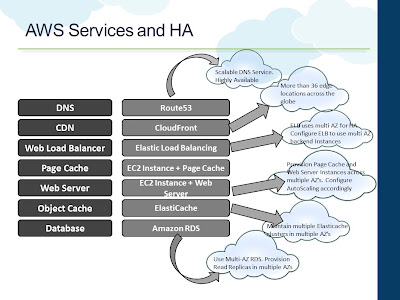

Different applications need different levels of availability. And it is easier to achieve 99.99% of availability. But pushing things forward, every extra nine comes with additional complexity in the architecture and cost. As application architects, it is also required to understand how the application end users perceive availability of a system. For example, let's consider a photo sharing website built on top of an open source CMS. If you break down the different areas in the architecture, you will be looking at the following

- A DNS service

- Content Distribution Network - serving static assets from the nearest edge location

- A front end web load balancer

- A page cache - to cache the HTML

- The web server - storing templates, etc...

- Object Cache - to cache the content, store user sessions

- Database - storing user data and other transactional data

|

| AWS Services and High Availability |

With the kind of service integration that Amazon offers, certain portions of your website remain available during an outage. For example, your users can still view photos (through the CDN) but might not be able to upload new photos (at normal throughput) during the outage. Users will still be able to see data from the cache, even if it is not up to date. Likewise, each area of the application needs to be examined from a failure point of view and addressed accordingly.
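Serving slightly stale data while the database is unreachable is one way to get the "users still see data from cache" behaviour described above. A minimal Python sketch, assuming an in-process dictionary stands in for the shared object cache and hypothetical `load_from_db` / `db_down` functions stand in for the database:

```python
import time
from typing import Any, Callable, Dict, List, Tuple


class StaleCache:
    """Cache that keeps serving expired entries when the backing store fails."""

    def __init__(self, ttl_seconds: float, clock: Callable[[], float] = time.monotonic):
        self.ttl = ttl_seconds
        self.clock = clock
        self._data: Dict[str, Tuple[Any, float]] = {}  # key -> (value, stored_at)

    def get(self, key: str, load: Callable[[str], Any]) -> Tuple[Any, bool]:
        """Return (value, is_stale), falling back to a stale copy if `load` fails."""
        now = self.clock()
        entry = self._data.get(key)
        if entry is not None and now - entry[1] < self.ttl:
            return entry[0], False  # fresh hit, no database call
        try:
            value = load(key)
        except Exception:
            if entry is not None:
                return entry[0], True  # database unavailable: serve the old copy
            raise  # nothing cached, so the failure reaches the user
        self._data[key] = (value, now)
        return value, False


# Hypothetical album lookup with a controllable clock.
clock = [0.0]
cache = StaleCache(ttl_seconds=60, clock=lambda: clock[0])
photos: Dict[str, List[str]] = {"album:1": ["a.jpg", "b.jpg"]}


def load_from_db(key: str) -> List[str]:
    return photos[key]


def db_down(key: str) -> List[str]:
    raise ConnectionError("database unavailable")


first = cache.get("album:1", load_from_db)   # loaded from the database
clock[0] = 120.0                              # the cached entry has now expired
during_outage = cache.get("album:1", db_down)
print(first, during_outage)
```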

Improve

As architects, we need to continuously ask questions about failures in each area/tier of the architecture. What if I pull out that web server behind the ELB: will my users' sessions be lost? What if that EBS volume becomes unresponsive? What if my RDS Instance is flooded with database connections? Such probing can lead to small improvements in the architecture that increase overall site availability.
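Take the first question: whether users lose their sessions when a web server is pulled from behind the ELB depends on where sessions are stored. The toy Python model below (hypothetical class and server names, with a plain dictionary standing in for a shared object cache) contrasts per-server sessions with a shared session store:

```python
from typing import Dict, Optional


class WebServer:
    """Toy web server that keeps sessions locally or in a shared store."""

    def __init__(self, name: str, session_store: Optional[Dict[str, str]] = None):
        self.name = name
        # Without a shared store, each server gets its own private dict.
        self.sessions: Dict[str, str] = session_store if session_store is not None else {}

    def login(self, session_id: str, user: str) -> None:
        self.sessions[session_id] = user

    def current_user(self, session_id: str) -> Optional[str]:
        return self.sessions.get(session_id)


# Per-server sessions: web-1 handled the login, then is pulled from the ELB.
web1, web2 = WebServer("web-1"), WebServer("web-2")
web1.login("s1", "alice")
local_result = web2.current_user("s1")    # None: the user appears logged out

# Shared sessions: a dict stands in for an object cache both servers use.
shared: Dict[str, str] = {}
web3, web4 = WebServer("web-3", shared), WebServer("web-4", shared)
web3.login("s1", "alice")
shared_result = web4.current_user("s1")   # "alice": the session survives

print(local_result, shared_result)
```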

So why did Reddit and Heroku go down?

I am sure the engineers at Reddit and Heroku are well aware of all this and have designed architectures that scale and try to keep the service up and running all the time. While there is no information on what really went wrong, we all know that software systems are not perfect all the time. No one designs a system that anticipates every possible failure and has a foolproof mechanism to address it. Only when a system is put to use, pushed to its boundaries and scaled does it reveal new flaws in an architecture we once thought was perfect. I am sure Reddit is learning from the areas of their architecture that went down during the outage and will make them better, just as Netflix wrote an article on the lessons they learnt from the previous outage.