Since this will be a multi-part article, here is an outline of how the different parts are arranged:

- First, we will set the context by taking a web application and identifying the areas where logs are generated for analysis

- Next, we will define the overall log storage structure, since logs are generated from different sources

- We will then look at each tier: how its logs can be collected and uploaded to centralized storage, and what the considerations are

- Finally, we will look at other factors such as cost implications, alternate storage options and how to use the logs for analysis

Let's take an e-commerce web application which has the following tiers:

- Content Distribution Network (CDN): AWS CloudFront, serving the static assets of the website

- Web/App Server running Apache / Nginx / Tomcat on Amazon EC2

- Search Server running Solr on Amazon EC2

- Database - Amazon RDS

The first three tiers are the major sources of log information. Your CDN provider will deliver access logs in a standard format, with information such as the edge location that served the request, the client IP address, the referrer and the user agent. The web servers and search servers write access logs, error logs and application logs (custom logging by your application).

|

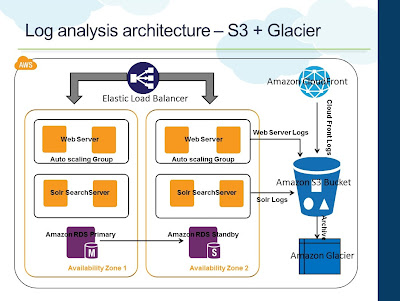

| Log Analysis Architecture |

In AWS, Amazon S3 is the natural choice for centralized log storage. Since S3 offers virtually unlimited storage and is replicated internally for redundancy, it is the right place to store the log files generated by the CDN, the web servers and the search servers. As per the architecture above, each of these tiers will be configured to push its logs to Amazon S3. We will evaluate each tier independently and look at how to set up logging and the considerations involved.

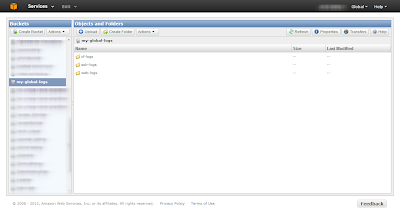

S3 Log Storage Structure

Since we have logs coming in front different sources, it is better to create a bucket structure to organize them. Let's say we have the following S3 bucket structure

|

| S3 Log Storage Bucket Structure |

- my-global-logs: bucket containing all the logs

    - cf-logs: folder under the bucket for storing CloudFront logs

    - web-logs: folder under the bucket for storing Web Server logs

    - solr-logs: folder under the bucket for storing Solr Server logs
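To make the layout concrete, here is a minimal Python sketch that maps each log source to its folder in my-global-logs. In S3 a "folder" is just a common prefix of object keys, so putting a file in cf-logs simply means giving it a key that starts with `cf-logs/`. The `LOG_PREFIXES` dictionary, the `log_key` and `log_uri` helpers and the sample file name are hypothetical names for illustration, not part of any AWS API.

```python
# Hypothetical helpers for illustration only; these names are not an AWS API.

BUCKET = "my-global-logs"

# Map each log source to its "folder" (key prefix) in the bucket.
LOG_PREFIXES = {
    "cloudfront": "cf-logs/",
    "web": "web-logs/",
    "solr": "solr-logs/",
}


def log_key(source: str, filename: str) -> str:
    """Return the S3 object key for a log file from the given source."""
    try:
        prefix = LOG_PREFIXES[source]
    except KeyError:
        raise ValueError(f"unknown log source: {source!r}") from None
    # S3 has no real directories: the folder is just the start of the key.
    return prefix + filename.lstrip("/")


def log_uri(source: str, filename: str) -> str:
    """Return the full s3:// URI for a log file."""
    return f"s3://{BUCKET}/{log_key(source, filename)}"


print(log_uri("web", "access_log.2013-05-01.gz"))
# s3://my-global-logs/web-logs/access_log.2013-05-01.gz
```

Keeping the prefixes in one table means adding a new tier later (say, a separate application log stream) only requires one new entry.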

AWS CloudFront

AWS CloudFront is the content distribution network service from AWS. With a growing list of edge locations (37 at the time of writing), it is a vital component of e-commerce applications hosted in AWS for serving static content. With CloudFront, you can deliver static assets and streaming video to users from the nearest edge location, thereby reducing latency and round trips while also offloading that delivery from the web servers.

Enable CloudFront Access Logging

You can configure CloudFront to log all access information in the "Create Distribution" step: select "Enable Logging" and specify the bucket to which CloudFront should push the logs.

Figure: Configure CloudFront for Access Logging

- Specify the bucket we created above in the "Bucket for Logs" option. This field accepts only a bucket in your account, not a sub-folder within the bucket

- Since we have a folder called "cf-logs" under the bucket to store these logs, enter that folder name in the "Log Prefix" option, including a trailing slash (cf-logs/) so that the log files land inside the folder

- CloudFront will then push access logs to this location every hour. The logs are in the W3C extended log file format and are compressed by AWS, since the raw files can be very large for websites that attract heavy traffic
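The same settings can also be expressed programmatically. The sketch below builds the logging block of a CloudFront distribution configuration, assuming the field names of the CloudFront API's logging configuration (`Enabled`, `IncludeCookies`, `Bucket`, `Prefix`), where the bucket is given as its S3 domain name rather than a path. The helper name is hypothetical, and the sketch only builds a dictionary; it does not call AWS.

```python
# Hypothetical helper: builds the "Logging" block of a CloudFront
# DistributionConfig. It does not contact AWS.


def cloudfront_logging_config(bucket: str, prefix: str,
                              include_cookies: bool = False) -> dict:
    """Return a logging configuration for the given bucket and folder."""
    # The bucket field takes a bucket only, so folders must go in the prefix.
    if "/" in bucket:
        raise ValueError("bucket must be a bucket name; put folders in the prefix")
    # A trailing slash makes the prefix behave like a folder in S3.
    if prefix and not prefix.endswith("/"):
        prefix += "/"
    return {
        "Enabled": True,
        "IncludeCookies": include_cookies,
        "Bucket": f"{bucket}.s3.amazonaws.com",  # bucket as S3 domain name
        "Prefix": prefix,
    }


print(cloudfront_logging_config("my-global-logs", "cf-logs"))
```

With boto3, a dictionary like this would go under the `Logging` key of the `DistributionConfig` passed to `create_distribution` or `update_distribution`; treat that as an assumption to check against the current CloudFront API reference.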

Once this is set up, CloudFront will periodically push access log files to this folder.
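Each delivered file is named after the distribution and the hour it covers. Assuming the naming pattern `prefix/distribution-ID.YYYY-MM-DD-HH.unique-ID.gz` with the hour in UTC, a small parser can recover the distribution and hour from an S3 key, which is handy for picking out the files for a given time window. The function, the named tuple and the sample key below are illustrative only.

```python
from datetime import datetime, timezone
from typing import NamedTuple


class CloudFrontLogName(NamedTuple):
    prefix: str
    distribution_id: str
    hour: datetime
    unique_id: str


def parse_cf_log_key(key: str) -> CloudFrontLogName:
    """Split a CloudFront log key such as
    'cf-logs/EMLARXS9EXAMPLE.2013-05-01-14.RT4KCN4SGK9.gz' into its parts.

    Assumes the prefix ends with '/', as with cf-logs/ above.
    """
    prefix, _, name = key.rpartition("/")
    parts = name.split(".")
    if len(parts) != 4 or parts[3] != "gz":
        raise ValueError(f"not a CloudFront log file name: {key!r}")
    dist_id, hour_str, unique_id, _ = parts
    # The hour in the file name is treated as UTC.
    hour = datetime.strptime(hour_str, "%Y-%m-%d-%H").replace(tzinfo=timezone.utc)
    return CloudFrontLogName(prefix + "/" if prefix else "", dist_id, hour, unique_id)


print(parse_cf_log_key("cf-logs/EMLARXS9EXAMPLE.2013-05-01-14.RT4KCN4SGK9.gz"))
```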

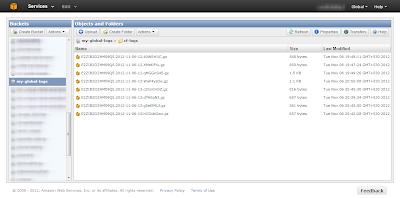

|

| CloudFront Logs |
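Since the files are gzip-compressed W3C extended logs, they can be read with the Python standard library alone. This minimal sketch assumes the layout described above: directive lines starting with `#` (including a `#Fields:` line listing the column names) followed by tab-separated records. It takes the column names from the file itself rather than hard-coding CloudFront's field list; the function names are hypothetical.

```python
import gzip
from typing import Iterable, Iterator


def parse_w3c_lines(lines: Iterable[str]) -> Iterator[dict]:
    """Yield one dict per record, keyed by the names in the #Fields: line."""
    fields = []
    for line in lines:
        line = line.rstrip("\r\n")
        if not line:
            continue
        if line.startswith("#Fields:"):
            # e.g. "#Fields: date time x-edge-location sc-bytes c-ip ..."
            fields = line[len("#Fields:"):].split()
        elif line.startswith("#"):
            continue  # other directives, such as "#Version: 1.0"
        else:
            # Records are tab-separated; empty values are written as "-".
            yield dict(zip(fields, line.split("\t")))


def read_cloudfront_log(path: str) -> Iterator[dict]:
    """Yield records from a gzip-compressed CloudFront access log file."""
    with gzip.open(path, "rt", encoding="utf-8") as f:
        yield from parse_w3c_lines(f)
```

For example, feeding the rows into `collections.Counter` keyed on `x-edge-location` gives a quick count of requests served by each edge location.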

In the next post, we will see how to configure the web tier to push its logs to S3 and the considerations involved.
