Amazon Virtual Private Cloud (VPC) is a great way to set up an isolated portion of AWS and control its network topology. It is also a good way to extend your data center and use AWS for burst requirements. With the latest "VPC for Everyone" announcement, what was earlier "Classic" and "VPC" in AWS will soon be only VPC. That is, every deployment in AWS will be on a VPC, even if it does not need all the additional features that VPC provides. Eventually, one might start using VPC features such as multiple Subnets, network isolation, and Network ACLs. Those who have already worked with VPCs understand the role of the NAT Instance in a VPC.

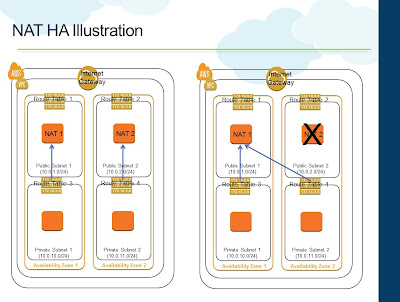


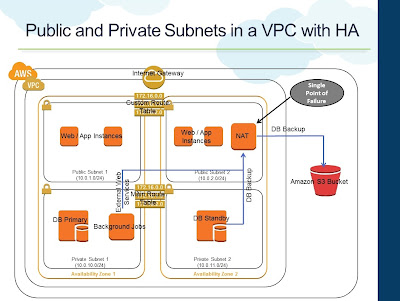

When you create a VPC, you typically create it with multiple Subnets (Public and Private). Instances launched in a Public Subnet have direct internet connectivity and send and receive internet traffic through the Internet Gateway of the VPC. Typically, internet-facing servers such as web servers are kept in the Public Subnet. A Private Subnet is used to launch Instances that do not require direct access from the internet. Instances in a Private Subnet can access the internet without exposing their private IP addresses by routing their traffic through a Network Address Translation (NAT) Instance in the Public Subnet. AWS provides an AMI that can be launched as a NAT Instance. The following diagram represents a standard VPC as provisioned through the AWS Management Console wizard.

- A Public Subnet that has direct internet connectivity through the Internet Gateway. Web Instances can be placed within the Public Subnet

- The custom Route Table associated with Public Subnet will have the necessary routing information to route traffic to the Internet Gateway

- A NAT Instance is also provisioned in the Public Subnet

- A Private Subnet that has outbound internet connectivity through the NAT Instance in the Public Subnet

- The Main Route Table is by default associated with the Private Subnet. This will have necessary routing information to route internet traffic to the NAT Instance

- Instances in the Private Subnet will use the NAT Instance for outbound internet connectivity. Examples include DB backups from a standby that need to be stored in S3, and background programs that make external web service calls

- Additional Subnets (Public and Private) are created in another Availability Zone

- Both Private Subnets are attached to the Main Routing Table

- Both Public Subnets are attached to the same Custom Routing Table

- Instances in the Private Subnet still continue to use the NAT Instance for outbound internet connectivity
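The routing described in the list above can be sketched as a small model. This is only an illustration, not AWS code: the CIDR blocks and the target names `igw-example` and `nat-instance` are hypothetical placeholders, and `next_hop` is a helper invented for this example. It picks the most specific matching route, which is how a VPC Route Table selects a target.

```python
import ipaddress

# Hypothetical route tables, modelled as (destination CIDR, target) pairs.
# The CIDR blocks and target names are illustrative assumptions only.
CUSTOM_ROUTE_TABLE = [      # associated with the Public Subnets
    ("10.0.0.0/16", "local"),
    ("0.0.0.0/0", "igw-example"),
]
MAIN_ROUTE_TABLE = [        # associated with the Private Subnets
    ("10.0.0.0/16", "local"),
    ("0.0.0.0/0", "nat-instance"),
]


def next_hop(route_table, destination_ip):
    """Return the target of the most specific route matching destination_ip."""
    address = ipaddress.ip_address(destination_ip)
    matches = []
    for cidr, target in route_table:
        network = ipaddress.ip_network(cidr)
        if address in network:
            matches.append((network.prefixlen, target))
    if not matches:
        return None
    # The longest prefix (most specific route) wins.
    return max(matches, key=lambda match: match[0])[1]


if __name__ == "__main__":
    # Traffic inside the VPC stays local; everything else leaves through
    # the Internet Gateway (Public) or the NAT Instance (Private).
    print(next_hop(MAIN_ROUTE_TABLE, "10.0.1.25"))
    print(next_hop(MAIN_ROUTE_TABLE, "54.1.2.3"))
    print(next_hop(CUSTOM_ROUTE_TABLE, "54.1.2.3"))
```

Because both Private Subnets share the Main Route Table in this layout, every internet-bound packet from either of them resolves to the same single NAT Instance.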

Though we increased availability by using multiple Availability Zones, the NAT Instance is still a Single Point of Failure. A NAT Instance is just another EC2 Instance and can become unavailable at any time. The updated architecture below uses two NAT Instances to provide failover and High Availability for the NAT function.

Figure: NAT Instance High Availability

- Each Subnet is associated with its own Route Table

- NAT1 is provisioned in Public Subnet 1

- NAT2 is provisioned in Public Subnet 2

- Private Subnet 1's Route Table (RT) has a routing entry that sends internet traffic to NAT1

- Private Subnet 2's Route Table (RT) has a routing entry that sends internet traffic to NAT2

Figure: NAT Instance HA Illustration

A script can be installed on both NAT Instances so that they monitor each other and swap the Route Table entry if one of them fails. For example, if NAT1 detects that NAT2 is not responding to its ping requests, it can change the Route Table of Private Subnet 2 to send internet traffic to NAT1. Once NAT2 becomes operational again, the swap can be reversed. AWS has good documentation on this, along with a sample script for the swapping.
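The monitoring and swapping logic can be sketched roughly as follows. This is a minimal sketch and not the AWS sample script. It assumes the `ec2` argument is an object exposing a `replace_route` method with the keyword arguments of the boto3 EC2 client, that the default route already exists in the peer's Route Table, and that the `ping` flags are the Linux ones. The function names and all IDs are hypothetical.

```python
import subprocess

DEFAULT_ROUTE = "0.0.0.0/0"  # destination used for internet-bound traffic


def is_reachable(ip_address, attempts=3, timeout_seconds=1):
    """Ping the peer NAT Instance; True if any attempt gets a reply.

    Assumes Linux ping flags (-c count, -W timeout in seconds).
    """
    for _ in range(attempts):
        result = subprocess.run(
            ["ping", "-c", "1", "-W", str(timeout_seconds), ip_address],
            stdout=subprocess.DEVNULL,
            stderr=subprocess.DEVNULL,
        )
        if result.returncode == 0:
            return True
    return False


def choose_nat(peer_healthy, own_instance_id, peer_instance_id):
    """The peer keeps its own Subnet's traffic while healthy; otherwise we take it."""
    return peer_instance_id if peer_healthy else own_instance_id


def reconcile_peer_route(ec2, peer_route_table_id, own_instance_id,
                         peer_instance_id, peer_healthy):
    """Point the peer Subnet's default route at whichever NAT should serve it.

    The route is rewritten on every call, so the same function covers both
    the failover and the reverse swap once the peer recovers.
    """
    target = choose_nat(peer_healthy, own_instance_id, peer_instance_id)
    ec2.replace_route(
        RouteTableId=peer_route_table_id,
        DestinationCidrBlock=DEFAULT_ROUTE,
        InstanceId=target,
    )
    return target
```

On NAT1, a loop would call `is_reachable` with NAT2's private IP every few seconds and pass the result to `reconcile_peer_route` along with the ID of Private Subnet 2's Route Table. NAT2 would run the mirror image for Private Subnet 1. A production script would also need to avoid rewriting the route when nothing has changed and to guard against both instances declaring each other dead at once.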

Apart from HA, this architecture also provides better overall throughput, since under normal conditions both NAT Instances carry the outbound internet traffic of the VPC. If there are workloads that require a lot of outbound internet connectivity, having more than one NAT Instance makes sense. Of course, you are still limited to one NAT Instance per Subnet, since each Subnet's Route Table can point internet traffic at only one NAT Instance at a time.