A recap from the previous posts:

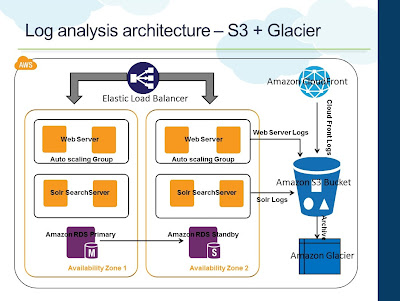

- We saw how to configure CloudFront to enable access logs. CloudFront pushes all access logs directly to a configured S3 bucket

- We looked at the considerations for logging at the web tier, such as local log storage and a dynamic environment, and configured the EC2 Instances to use Ephemeral Storage for storing the log files locally

In this post, we will see how to push logs from local storage to the central log store, Amazon S3, and what the considerations are.

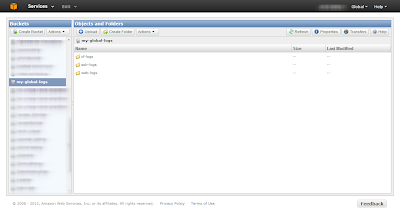

Amazon S3 folder structure

Logs are generated throughout the day, so we need a proper folder structure to store them in S3. Logs are particularly useful for analysis, such as investigating a production issue or finding a usage pattern like feature adoption by users per month. Hence it makes sense to store them in a year/month/day/hour_of_the_day structure.

Multiple Instances

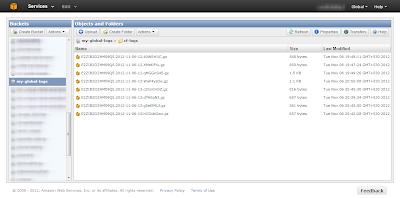

The web tier will be automatically scaled and we will have multiple Instances always running for High Availability and Scalability. So, even if we have logs stored hourly basis, we will be having log files with similar names from multiple Instances. Hence the folder structure needs to factor multiple Instances as well. The resultant folder structure in S3 will look something like this

Note in the above picture (as encircled) that we are storing "Instance" wise logs for every hour.
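As a minimal sketch of how such a prefix could be assembled in the shell, the function below joins the bucket, the year/month/day/hour and the Instance Id. The function name `s3_log_prefix`, the bucket name and the sample Instance Id are illustrative assumptions, not names from this series. It formats the time in UTC and relies on the `-d @epoch` option of GNU `date`.

```shell
#!/bin/bash
# Build the S3 prefix  s3://<bucket>/<year>/<month>/<day>/<hour>/<instance-id>/
# Arguments: bucket name, instance id, optional Unix timestamp (defaults to now).
# NOTE: function and argument names are hypothetical; assumes GNU date.
s3_log_prefix() {
    local bucket="$1" instance_id="$2" epoch="${3:-$(date +%s)}"
    local stamp
    # Format the timestamp once so every path component comes from the same instant.
    stamp="$(date -u -d "@${epoch}" +%Y/%m/%d/%H)"
    printf 's3://%s/%s/%s/\n' "$bucket" "$stamp" "$instance_id"
}
```

For example, `s3_log_prefix my-log-bucket i-0123456789abcdef0 0` prints `s3://my-log-bucket/1970/01/01/00/i-0123456789abcdef0/`, since timestamp 0 is midnight UTC on 1 January 1970.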

Log Rotation

Every logging framework has an option to rotate log files on size, date, etc. Since we will be periodically pushing the log files to Amazon S3, it might seem sensible to, say, rotate the log file every hour and push it to S3. The downside is that we cannot anticipate the traffic to the web tier, which is the very reason the web tier scales automatically on demand. A sudden surge in traffic can generate large log files that start filling up the file system, eventually making the Instance unavailable. Hence it is better to rotate the log files by size.
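The idea behind a size-based trigger can be pictured with a small shell sketch. This only illustrates the check itself and is not how any particular rotation tool is implemented. The function name `needs_rotation` and its arguments are assumptions made for the example.

```shell
#!/bin/bash
# Return success (0) when FILE is at least MAX_BYTES in size.
# Hypothetical helper for illustration only.
needs_rotation() {
    local file="$1" max_bytes="$2" size
    [ -f "$file" ] || return 1            # a missing file never needs rotation
    size=$(( $(wc -c < "$file") ))        # size in bytes, whitespace stripped
    [ "$size" -ge "$max_bytes" ]
}
```

A call such as `needs_rotation /var/log/applogs/httpd/web $((50 * 1024 * 1024)) && echo "rotate now"` would report when the file has reached 50 MB, regardless of how long that took.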

Linux-logrotate

You can use the default logrotate available in Linux systems to rotate the log files on size. Logrotate can be configured to call a post-rotation script so that we can push the newly rotated files to S3.

Note: If you are using logrotate, make sure your logging framework isn't also configured to rotate the log files.

A sample logrotate implementation will look like this:

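```
/var/log/applogs/httpd/web {
    # Do not complain if the log file is missing
    missingok
    # Keep up to 52 rotated files
    rotate 52
    # Rotate once the file grows beyond 50 MB
    size 50M
    # Copy the log and truncate the original in place
    copytruncate
    # Skip rotation when the log is empty
    notifempty
    create 644 root root
    sharedscripts
    postrotate
        /usr/local/admintools/compress-and-upload.sh web > /var/log/custom/web_logrotate.log 2>&1
    endscript
}
```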

The above configuration rotates the "httpd" log file whenever its size exceeds 50 MB. It also calls a "postrotate" script to compress the rotated file and upload it to S3.
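Two practical consequences of this configuration are worth spelling out. First, logrotate only checks the file size when it runs, and it is typically invoked once a day from cron or a timer. Size-based rotation is therefore only as prompt as the schedule that invokes logrotate. Second, `rotate 52` combined with `size 50M` means that roughly 52 × 50 MB of rotated files can accumulate locally. The arithmetic, as a hedged shell sketch (the function name is an assumption, and real files can exceed 50 MB if they grow quickly between runs):

```shell
#!/bin/bash
# Approximate upper bound, in MB, of disk space held by rotated log files:
# number of rotations kept  x  size threshold per file.
# Hypothetical helper; real usage can be higher if a file overshoots the threshold.
max_rotated_mb() {
    local rotate_count="$1" size_mb="$2"
    echo $(( rotate_count * size_mb ))
}

max_rotated_mb 52 50    # prints 2600
```

About 2.6 GB of local storage should therefore be budgeted for rotated web logs, or the `rotate` count lowered to suit the volume.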

Upload to S3

The next step is to upload the rotated log file to S3. There are a few things to take care of:

- We need a mechanism to access the S3 API from the shell to upload the files. S3cmd is a widely used command-line tool for accessing the S3 APIs, and it needs to be set up on the Instance

- We are rotating by size, but we will be uploading to a folder structure that organizes log files by the hour

- We will also be uploading from multiple Instances, so we need to fetch the Instance Id in order to store the files in the corresponding folder. Within EC2, there is an easy way to get Instance metadata: if we "wget" "http://169.254.169.254/latest/meta-data/", it lists the available Instance metadata such as Instance Id, public DNS, etc. For example, if we "wget" "http://169.254.169.254/latest/meta-data/instance-id", we get the current Instance Id
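Because the Instance Id ends up in the S3 path, it can be worth sanity-checking what the metadata service returns before using it. The sketch below is one possible way to do that, not the method used in this series. The function names are hypothetical, and it assumes `curl` is installed (it is used instead of `wget` for its header handling). It first requests an IMDSv2 session token and falls back to a plain request if none is returned.

```shell
#!/bin/bash
# Sketch: fetch the EC2 instance id and sanity-check it before using it in a path.
# Function names are hypothetical. Requires curl; only useful on an EC2 instance.
METADATA_URL="http://169.254.169.254/latest"

# Succeeds if the argument looks like an instance id: "i-" plus 8 or 17 hex digits.
is_instance_id() {
    printf '%s\n' "$1" | grep -Eq '^i-([0-9a-f]{8}|[0-9a-f]{17})$'
}

# Prints the instance id, or returns non-zero if it cannot be fetched.
fetch_instance_id() {
    local token id
    # IMDSv2: request a session token first; fall back to a plain request otherwise.
    token="$(curl -sf --max-time 2 -X PUT "$METADATA_URL/api/token" \
        -H "X-aws-ec2-metadata-token-ttl-seconds: 60")"
    if [ -n "$token" ]; then
        id="$(curl -sf --max-time 2 -H "X-aws-ec2-metadata-token: $token" \
            "$METADATA_URL/meta-data/instance-id")"
    else
        id="$(curl -sf --max-time 2 "$METADATA_URL/meta-data/instance-id")"
    fi
    is_instance_id "$id" && printf '%s\n' "$id"
}
```

A caller could then write `EC2_INSTANCE_ID="$(fetch_instance_id)" || exit 1`, so that an error page or an empty response never becomes a folder name.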

The following set of commands will compress the rotated file and upload it to the corresponding S3 bucket:

```shell
#!/bin/bash
# compress-and-upload.sh <log-name>: compress the rotated log and upload it to S3.

LOG_DIR=/var/log/applogs/httpd     # must match the path in the logrotate configuration
BUCKET_NAME="my-log-bucket"        # placeholder: replace with your log bucket name

# Perform rotated log file compression
gzip -c "$LOG_DIR/$1.1" > "$LOG_DIR/$1.1.gz"

# Fetch the instance id from the instance metadata
EC2_INSTANCE_ID="$(wget -q -O - http://169.254.169.254/latest/meta-data/instance-id)"

if [ -z "$EC2_INSTANCE_ID" ]; then
    echo "Error: Couldn't fetch Instance ID .. Exiting .."
    exit 1
fi

# Upload log file to Amazon S3 bucket under year/month/day/hour/instance-id/log-name
/usr/bin/s3cmd -c /.s3cfg put "$LOG_DIR/$1.1.gz" \
    "s3://$BUCKET_NAME/$(date +%Y/%m/%d/%H)/$EC2_INSTANCE_ID/$1/$(hostname -f)-$(date +%H%M%S)-$1.gz"

# Remove the rotated compressed log file
rm -f "$LOG_DIR/$1.1.gz"
```
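The script above removes the compressed copy whether or not the upload succeeded. If transient network failures are a concern, one option is to retry the upload a few times before giving up. The helper below is a generic sketch, not part of the original script. The name `retry` and its arguments are assumptions, and it relies on the wrapped command (for example s3cmd) exiting non-zero on failure.

```shell
#!/bin/bash
# Run a command up to MAX_TRIES times, pausing DELAY seconds between attempts.
# Hypothetical helper: retry MAX_TRIES DELAY command [args...]
retry() {
    local max_tries="$1" delay="$2" attempt=1
    shift 2
    until "$@"; do
        if [ "$attempt" -ge "$max_tries" ]; then
            echo "Giving up after $attempt attempts: $*" >&2
            return 1
        fi
        attempt=$(( attempt + 1 ))
        sleep "$delay"
    done
}
```

It could wrap the upload as `retry 3 5 /usr/bin/s3cmd -c /.s3cfg put "$file" "$target" && rm -f "$file"`, where `$file` and `$target` stand for the compressed log and its S3 destination. The local copy is then deleted only after a successful upload.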

Now that the files are automatically rotated, compressed and uploaded to S3, there is one last thing to take care of.

Run While Shutdown

Since the web tier scales automatically with the load, Instances can be terminated when the load decreases. In such scenarios, we might still be left with some log files (up to 50 MB) that were not rotated and uploaded. During shutdown, we can have a small script that forces logrotate to rotate the final set of files, compress them and upload them.

```shell
stop() {
    echo -n "Force rotation of log files and upload to S3 initiated"
    /usr/sbin/logrotate -f /etc/logrotate.d/web
    exit 0
}
```
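For context, a `stop()` function like this usually lives inside an init script. The skeleton below is a sketch under stated assumptions: it targets a SysV-style init system, and the service name `upload-logs` and the variable names are hypothetical. On Red Hat style systems the shutdown sequence generally calls `stop` only for services that have a lock file under `/var/lock/subsys`, which is why `start` creates one.

```shell
#!/bin/bash
# Sketch of a SysV-style init script around the stop() hook.
# The service name "upload-logs" and the variable names are hypothetical.
LOGROTATE="/usr/sbin/logrotate"
LOGROTATE_CONF="/etc/logrotate.d/web"
LOCK_FILE="/var/lock/subsys/upload-logs"

start() {
    # Nothing to run at boot; just record that the service is "running"
    # so that the shutdown sequence will call stop.
    touch "$LOCK_FILE"
}

stop() {
    echo "Force rotation of log files and upload to S3 initiated"
    "$LOGROTATE" -f "$LOGROTATE_CONF"
    rm -f "$LOCK_FILE"
}

# Dispatch on the action passed by init ("start" or "stop").
run_action() {
    case "$1" in
        start) start ;;
        stop)  stop ;;
        *)     echo "Usage: $0 {start|stop}" >&2; return 1 ;;
    esac
}
```

A real init script would end with `run_action "$1"` and be registered for the relevant runlevels. Keep in mind that an Instance being terminated has limited time to shut down, so a large final upload may not always complete.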

Use IAM

We need to provide an Access Key and Secret Access Key to the S3cmd utility for S3 API access. Do NOT provide the AWS account's own Access Key and Secret Access Key. Instead, create an IAM user that has access only to the specific S3 bucket where we are uploading the files, and use that IAM user's Access Key and Secret Access Key. A sample policy allowing the IAM user access to the S3 log bucket would be:

```json
{
  "Statement": [
    {
      "Sid": "Stmt1355302111002",
      "Action": [
        "s3:*"
      ],
      "Effect": "Allow",
      "Resource": [
        "arn:aws:s3:::"
      ]
    }
  ]
}
```

Note:

- The above policy allows the IAM user to perform all actions on the S3 bucket named in the "Resource" ARN (the bucket name is left out of the sample above and must be filled in). The user will not have permission to access any other buckets or services

- If you want to restrict access further, instead of allowing all actions on the S3 bucket, you can allow only PutObject (s3:PutObject) for uploading the files

- With the above approach, you will be storing the IAM credentials on the EC2 Instance itself. An alternative approach is to use IAM Roles so that the EC2 Instance obtains the API credentials at runtime
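To illustrate the PutObject-only restriction mentioned in the notes, the sketch below prints such a policy for a given bucket. The function name `put_only_policy` and the bucket name passed to it are hypothetical. Since `s3:PutObject` is an object-level action, the resource is written as the bucket ARN followed by `/*`. Depending on the S3cmd version and options used, additional permissions may turn out to be necessary, so treat this as a starting point.

```shell
#!/bin/bash
# Print an IAM policy that only allows uploading objects into one bucket.
# The function name and the bucket name passed to it are hypothetical.
put_only_policy() {
    local bucket="$1"
    cat <<EOF
{
  "Version": "2012-10-17",
  "Statement": [
    {
      "Effect": "Allow",
      "Action": ["s3:PutObject"],
      "Resource": ["arn:aws:s3:::${bucket}/*"]
    }
  ]
}
EOF
}
```

For example, `put_only_policy my-log-bucket > put-only-policy.json` writes a policy document that can be attached to the IAM user or to an IAM Role.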

With that, we have the web tier log files automatically rotated, compressed, uploaded to Amazon S3 and stored in a central location. We have access to log information by year/month/day/hour and Instance-wise.